𝐈𝐧𝐭𝐫𝐨 𝐭𝐨 𝐂𝐨𝐧𝐭𝐚𝐢𝐧𝐞𝐫 𝐍𝐞𝐭𝐰𝐨𝐫𝐤𝐢𝐧𝐠

Chronicles of Containers - Part 6

Hey there! 👋

As promised last week, I’m switching gears a bit and moving back to deep-dives in containers :) If you are curious about WHY I’m switching contexts, it’s actually quite simple - I’m experimenting with an approach where I spend a week or two exploring a topic, and just when I reach the peak of “wow, I can draw a ton of stuff on this topic”, I cut it off and move to a different topic. My reasoning is that I achieve two things: 1) I leave my subconscious to keep processing what I’ve learned so far and 2) I never get bored by spending months on the same thing :)

Anyway, back to containers. Surprisingly or not, I presumed that Container Networking would be a rather simple topic to cover. And I was dead wrong. As I eventually learned, there’s SO MUCH under the hood and I’m just getting warmed up.

One thing that actually fascinated me is that, by default, Containers have no Network Adapters at all! And yes, it surprised me because I thought you magically get it out of the box. Nope. Doesn’t work that way. So, how DOES it work? Well, as usual, the image goes first and then some more details:


💡 𝐄𝐚𝐜𝐡 𝐜𝐨𝐧𝐭𝐚𝐢𝐧𝐞𝐫 𝐠𝐞𝐭𝐬 𝐢𝐭𝐬 𝐨𝐰𝐧 𝐍𝐞𝐭𝐰𝐨𝐫𝐤 𝐢𝐬𝐨𝐥𝐚𝐭𝐢𝐨𝐧 - one of the beauties of container isolation is that, alongside its own Task List, File Tree and Registry, each container also gets its own "Network Namespace". From the container's POV, it has no clue it's in a container, so all you see from the INSIDE is zero or more container-specific network adapters. What you see from the OUTSIDE (i.e. your Host OS) is an array of Network Namespaces, each with a container locked inside.

💡 𝐂𝐨𝐧𝐭𝐚𝐢𝐧𝐞𝐫𝐬 𝐡𝐚𝐯𝐞 𝐧𝐨 𝐝𝐞𝐟𝐚𝐮𝐥𝐭 𝐧𝐞𝐭𝐰𝐨𝐫𝐤 𝐚𝐝𝐚𝐩𝐭𝐞𝐫𝐬 - this came as a surprise to me. Even though containers are isolated, by default you don't really get any networking capabilities. Executing "ipconfig" inside one is likely to return nothing. That's where the "Container Networking" stuff kicks in.

💡 𝐂𝐨𝐧𝐭𝐚𝐢𝐧𝐞𝐫 𝐍𝐞𝐭𝐰𝐨𝐫𝐤 𝐈𝐧𝐭𝐞𝐫𝐟𝐚𝐜𝐞 𝐢𝐬 𝐚 '𝐬𝐭𝐚𝐧𝐝𝐚𝐫𝐝' - CNI is nothing more than a framework. It defines three things: a format for specifying network adapters, plugins (binaries that set up the networking based on that specification), and commands (such as ADD and DEL) that can be executed against a container in order to add or remove adapters. And even though it’s not a ‘standard’ per se, it seems to be the most commonly used approach to configuring container networking.

💡 𝐂𝐍𝐈 𝐦𝐚𝐜𝐡𝐢𝐧𝐞𝐫𝐲 𝐢𝐬 𝐜𝐚𝐥𝐥𝐞𝐝 𝐨𝐧𝐜𝐞 𝐭𝐡𝐞 𝐜𝐨𝐧𝐭𝐚𝐢𝐧𝐞𝐫 𝐢𝐬 𝐬𝐭𝐚𝐫𝐭𝐞𝐝 - as soon as the container has been created and has its network namespace, the container runtime (e.g. containerd, rkt, etc.) will invoke CNI. CNI then loads the specification file, looks up WHAT needs to be set up, and invokes the actual plugins to do the dirty work (e.g. add & configure the actual network adapters inside the container).
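
To make the "looks up WHAT needs to be set up" step a bit more concrete, here is a tiny Python sketch. It is an illustration only: the config is a trimmed-down CNI network configuration list with made-up values, and `plan_invocations` is a hypothetical helper, not part of any real runtime. All it shows is how the list of plugins can be read from the config and in which order they would be called. Per the CNI spec, plugins run in the listed order for ADD and in reverse order for DEL.

```python
import json

# Illustrative network configuration list (values are made up for this sketch).
CONFLIST = """
{
  "cniVersion": "1.0.0",
  "name": "demo-net",
  "plugins": [
    {"type": "bridge", "bridge": "cni0"},
    {"type": "tuning"}
  ]
}
"""


def plan_invocations(conflist_json: str, command: str) -> list:
    """Return the plugin names in the order they would be invoked."""
    conf = json.loads(conflist_json)
    plugin_types = [plugin["type"] for plugin in conf["plugins"]]
    if command == "ADD":
        return plugin_types                  # set up in listed order
    if command == "DEL":
        return list(reversed(plugin_types))  # tear down in reverse order
    raise ValueError(f"command not covered by this sketch: {command}")


if __name__ == "__main__":
    print(plan_invocations(CONFLIST, "ADD"))
    print(plan_invocations(CONFLIST, "DEL"))
```

Each name in the returned list corresponds to a plugin binary that the runtime would then execute.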

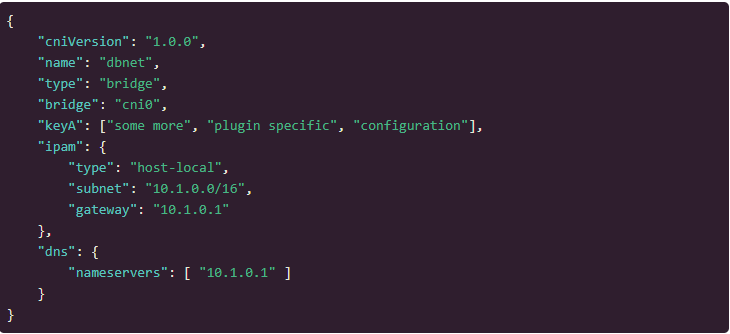

Here’s an example of what that config file might look like:

(Image source: https://www.cni.dev/docs/spec/#add-example)
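
In case the image doesn't render for you, here is a rough text version of such a config, built in Python and printed as JSON. It is only loosely modeled on the bridge example in the CNI spec linked above: the network name, bridge name, subnet and gateway are illustrative values I picked for this sketch, not a copy of the image.

```python
import json

# Illustrative values only; adjust them to your own environment.
bridge_network = {
    "cniVersion": "1.0.0",
    "name": "demo-net",              # hypothetical network name
    "plugins": [
        {
            "type": "bridge",        # name of the plugin binary to invoke
            "bridge": "cni0",        # name of the bridge to create or reuse
            "ipam": {                # IP address management is delegated to another plugin
                "type": "host-local",
                "subnet": "10.1.0.0/16",
                "gateway": "10.1.0.1",
            },
        }
    ],
}


def to_conflist(network: dict) -> str:
    """Serialize the network definition roughly as it would sit on disk."""
    return json.dumps(network, indent=2)


if __name__ == "__main__":
    print(to_conflist(bridge_network))
```

The important bit is the "type" field: it names the plugin, and everything else in that object is handed to the plugin as its parameters.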

As you might have guessed, this is a CNI specification that instructs the CNI machinery to invoke a plugin called “bridge” (which really is just an executable with that name, e.g. “bridge.exe”, in the plugins/ folder), pass it the config params from above, and expect it to configure the network bridge.
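
Here is a minimal Python sketch of what "invoke a plugin and pass it the config" roughly means. According to the CNI spec, the plugin receives its config as JSON on stdin, while the call parameters travel in environment variables such as CNI_COMMAND and CNI_NETNS. The helper `build_invocation`, the plugin folder, the container ID and the namespace value below are all hypothetical, and the sketch only prepares the call rather than executing a real plugin.

```python
import json
import os


def build_invocation(plugin_conf: dict, plugin_dir: str, container_id: str,
                     netns: str, ifname: str = "eth0"):
    """Return (executable path, environment, stdin bytes) for a CNI ADD call."""
    # The plugin is looked up by its "type" inside the plugins folder.
    executable = os.path.join(plugin_dir, plugin_conf["type"])
    env = {
        "CNI_COMMAND": "ADD",             # which operation to perform
        "CNI_CONTAINERID": container_id,  # ID chosen by the runtime
        "CNI_NETNS": netns,               # reference to the container's network namespace
        "CNI_IFNAME": ifname,             # name of the adapter to create inside the container
        "CNI_PATH": plugin_dir,           # where to search for plugin executables
    }
    stdin = json.dumps(plugin_conf).encode("utf-8")
    return executable, env, stdin


if __name__ == "__main__":
    conf = {"cniVersion": "1.0.0", "name": "demo-net",
            "type": "bridge", "bridge": "cni0"}
    exe, env, payload = build_invocation(
        conf, "plugins", "demo-container-id", "demo-netns")
    print(exe)
    print(env["CNI_COMMAND"], env["CNI_IFNAME"])
    print(payload.decode("utf-8"))
```

A runtime would then hand these three pieces to the OS, e.g. with something like `subprocess.run([exe], input=payload, env=env, capture_output=True)`, and read the plugin's JSON result from stdout.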

And that’s about it. At least as far as the introductory part is concerned. In the next article I will dig a level deeper into CNI, the concepts it defines, its plugins and common operations.

Until then, if you missed some of the previous articles, you can find them at the bottom of the page.

💡💡 P.S. You should probably know that Docker has its own machinery for dealing with Networking. It CAN use CNI, but it has its own ways and you can read about them here.

Other articles in the Container series:

How do COWs (Containers on Windows) work? (Part 5 of the series) - specific focus on running containers natively on Windows.

What is Container Network Interface (CNI)? (Part 7 of the series) - a deeper look into how networking is configured at runtime.

What's inside the Container Image? (Part 8 of the series) - exploring & describing image content.